Hundred millionth changeset submitted: some graphs and stats

Posted by LucGommans on 25 February 2021 in English. Last updated on 26 February 2021.

https://www.openstreetmap.org/changeset/100000000

How many people tried to get that milestone changeset?

How did the server cope with the load?

What was the peak number of changesets per second?

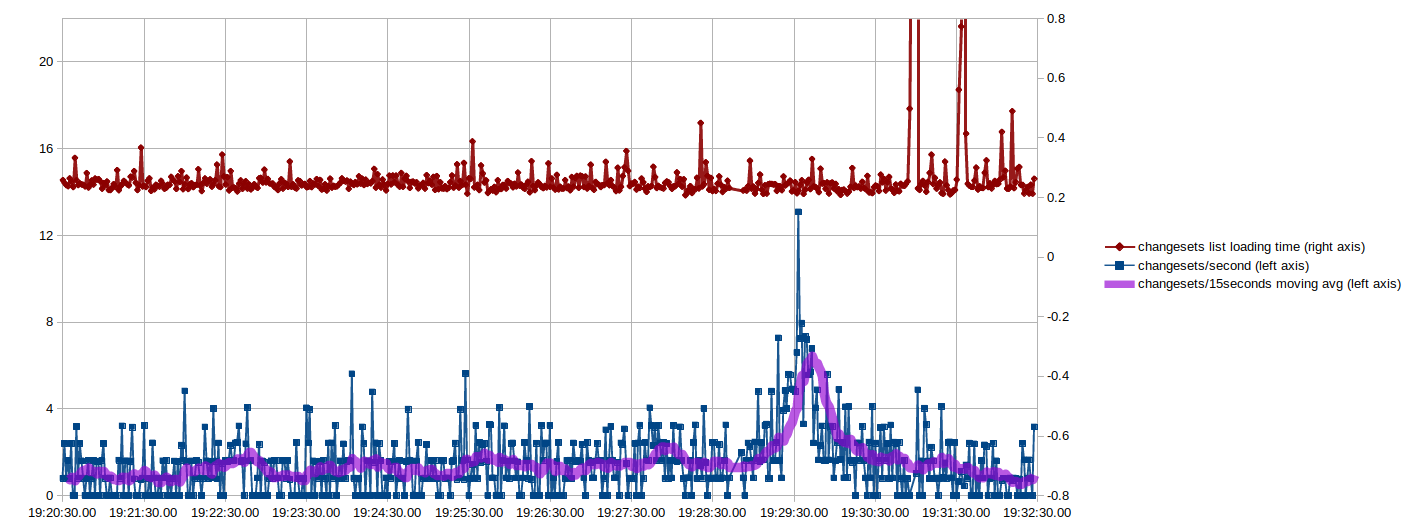

To monitor the changeset count and make my own attempt at getting the hundred millionth changeset, I had a Bash one-liner running in a terminal that refreshed the count every 2 seconds and, after changeset 99 999 878, every second. These are the resulting graphs (click to enlarge):

The X-axis here is the time, which I think makes the most sense. Another interesting way of looking at it, though, is to plot the changeset number on the X-axis so you can more easily see where the 100 000 000th was and what happened before vs. after:

Note that this is a scatter plot, meaning that the points are not spaced equidistantly along the X-axis: each one is placed according to its value. If there is a gap in time, the points will be plotted further apart, and the same goes for a jump in the changeset number. Since there were around 10 changesets per second for a few seconds, you can see those data points being pulled apart horizontally.

What was the peak number of changesets per second?

The data makes this quite easy: the 1.2 seconds preceding 19:29:33 saw the submission of 16 changesets, and 16÷1.2≈13 changesets per second.

How many people tried to get that milestone changeset?

Everything looks rather stable until we’re 300 changesets away from the milestone. Taking that stable stretch as the baseline: changesets -790 through -300 (counting backwards from 100 million) ran from 19:20:15.27 until 19:27:02.27, which is 490 changesets in 407 seconds, or 1.204 changesets per second. The capturing ended at 19:32:27.41, by which time the +323rd changeset had been submitted: 1113 changesets in 732.14 seconds. At the 1.204 rate we would have expected 881.5 changesets in those 732.14 seconds, but instead we saw 1113. Some (most?) people submitted multiple attempts, but without doing deeper analysis on this already-overanalysed event, let’s say that about 230 extra changesets came in. That’s a lot of changesets!

The baseline edits were of course also made shortly before the milestone, so the 1.204 figure may itself be off, but the true “what would have happened if this event hadn’t been tonight” is indeterminable. We could look at previous Thursday nights’ rates, seasonal influences, growth rates, and still have error margins. The people that I talked to seemed to have their changes lined up much longer than 10 minutes ahead (more like a few hours), so I don’t think this influenced the 1.204 figure much.
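The arithmetic in this section can be re-checked with a few lines of shell. This is only a sketch using the figures quoted in the text: the variable names are mine, awk is used because the shell only does integer arithmetic, and the rate is rounded to three decimals before it is reused, as in the text.

```shell
#!/bin/sh
# Figures quoted in the text; variable names are illustrative.
baseline_changesets=490   # changesets -790 through -300
baseline_seconds=407      # 19:20:15.27 until 19:27:02.27
total_changesets=1113     # changesets -790 through +323
total_seconds=732.14      # 19:20:15.27 until 19:32:27.41

# The shell has no floating-point arithmetic, so awk does the divisions.
summary="$(awk -v bc="$baseline_changesets" -v bs="$baseline_seconds" \
               -v tc="$total_changesets" -v ts="$total_seconds" 'BEGIN {
    rate     = sprintf("%.3f", bc / bs)   # baseline rate, rounded as in the text
    expected = rate * ts                  # changesets expected at that rate
    printf "%s %.1f %.1f", rate, expected, tc - expected
}')"

# Split the three space-separated results into named variables.
set -- $summary
rate=$1 expected=$2 extra=$3
echo "baseline rate: $rate/s, expected: $expected, extra: $extra"
```

The surplus comes out slightly above the round 230 used in the text, which is well within the error margins discussed here.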

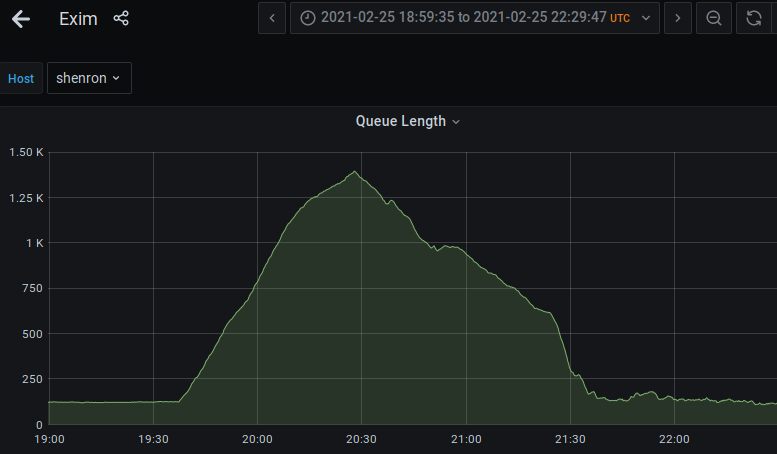

How did the server cope with the load?

Perfectly. I see no disturbance at all.

But wait, what’s up with Exim, the mail server?

The changesets were handled just fine, but emails are acting funky. Why are thousands of emails being sent in the first place? Well, people started congratulating Lamine Ndiaye on the changeset: each comment sends one email to her. But everyone who commented earlier also gets an email notification! So the second comment will trigger two emails (to the author and the first commenter), the third one three, etc. Within 30 minutes there were 65 comments, which would have triggered 2145 emails (1 + 2 + … + 65) if nobody quickly unsubscribed. On Telegram I heard that someone on IRC asked people to stop congratulating and, if they already had, to unsubscribe from the thread.

This isn’t even exponential growth (the total grows quadratically with the number of comments), e.g. the fourth comment didn’t trigger 8 emails or anything, but it still created quite a storm. Interesting to see what behaviour is created during unusual times.
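To double-check the 2145 figure and compare it with what real exponential growth would look like, here is a small sketch. It assumes, as the text does, that comment number n triggers n emails and that nobody unsubscribes; the variable names are illustrative.

```shell
#!/bin/sh
# Assumption (from the text): comment n notifies n people, nobody unsubscribes.
comments=65

total=0
n=1
while [ "$n" -le "$comments" ]; do
    total=$((total + n))   # the n-th comment triggers n emails
    n=$((n + 1))
done
echo "emails after $comments comments: $total"

# Closed form for the same sum: n(n+1)/2, i.e. quadratic growth.
closed_form=$((comments * (comments + 1) / 2))
echo "closed form: $closed_form"

# For contrast, a doubling rule (1, 2, 4, 8, ...) would already total
# 2^10 - 1 emails after only 10 comments.
doubling_after_10=$(( (1 << 10) - 1 ))
echo "doubling rule after 10 comments: $doubling_after_10"
```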

Making-of

The top two graphs were made with LibreOffice Calc, the bottom one is from a server monitor as linked by brabo in the Dutch OSM Telegram group.

Data collection was basically fetching the recent changesets page (you could find that link by looking at what page is requested when you click History on the homepage) about once a second. It’s fairly crude: it would be more accurate to download each changeset and look at the timestamp, but this was a quick way to also make an attempt at the milestone edit, and afterwards I just used the data I already had. To actually turn this page into something useful, a little bit of Bash was used:

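```shell
while :; do
    # Newest changeset number from the history page, printed without a trailing newline
    curl -sS 'https://www.openstreetmap.org/history?list=1' | grep -o 'changeset_[0-9][0-9]*' | head -1 | cut -b 11- | tr -d '\n'
    # Current time, human-readable and as a unix timestamp, on the same line
    date '+ %Y-%m-%d %H:%M:%S.%N %s.%N'
    sleep 1
done
```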

This loops forever (while :; do is equivalent to while true; do): it fetches the page, looks for changeset_[digits], takes the first match, cuts off the changeset_ prefix, and removes the trailing newline. Because the result is not piped any further, it is printed to the terminal. The second command prints the current date and time. Because we removed the newline from the previous command, it will appear on the same line, hence the starting space. The two date formats used look like 2021-02-25 19:29:30.12345 and 1614390123.12345 (fractions shortened here; %N prints nine digits): the former is easier to read for me and can also be used in LibreOffice Calc, the latter is convenient for doing calculations on since it just counts the number of seconds since January 1st, 1970 (commonly called a unix timestamp). The terminal will show something like:

```
100037587 2021-02-26 10:35:55.801165148 1614332155.801165148
100037589 2021-02-26 10:35:57.268372774 1614332157.268372774
100037593 2021-02-26 10:35:58.752743477 1614332158.752743477
```
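To see what each stage of the pipeline does without hitting the server, it can be run against a tiny stand-in for the page. The HTML below is hypothetical: the real markup of the history page may differ, and all the pipeline relies on is that changeset IDs appear as changeset_ followed by digits, newest first.

```shell
#!/bin/sh
# Hypothetical stand-in for the history page (the real markup may differ).
page='<li id="changeset_100037593">...</li>
<li id="changeset_100037589">...</li>'

# Stage 1: grep -o prints only the matching parts, one per line.
matches="$(printf '%s\n' "$page" | grep -o 'changeset_[0-9][0-9]*')"
# Stage 2: head -1 keeps the first (newest) match.
first="$(printf '%s\n' "$matches" | head -1)"
# Stage 3: cut -b 11- drops the 10-byte "changeset_" prefix.
id="$(printf '%s\n' "$first" | cut -b 11-)"

echo "$first -> $id"
```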

To get this into LibreOffice, you can either copy and paste the whole lines and have them parsed as space-separated CSV, or take the individual columns by holding Ctrl while selecting the text you want. This will let you select, for example, only the changeset numbers as a block without taking the rest of the line (at least in GNOME Terminal).

And from there it’s basic LibreOffice Calc usage. For example, to get the number of changesets per second, if the changeset numbers are in column A and the unix timestamps in column B, insert into cell C2: =(A2-A1)/(B2-B1)

And then drag it down or copy it down until it covers the whole range of values. This formula divides the number of changesets made by the amount of time elapsed between the two requests.
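The same per-interval rate can also be computed straight from the logged lines, without a spreadsheet. This is a sketch rather than what was used for the graphs: it feeds the three sample lines shown earlier through awk and applies the same (A2-A1)/(B2-B1) division to each consecutive pair.

```shell
#!/bin/sh
# Sample lines in the format printed by the collection loop.
sample='100037587 2021-02-26 10:35:55.801165148 1614332155.801165148
100037589 2021-02-26 10:35:57.268372774 1614332157.268372774
100037593 2021-02-26 10:35:58.752743477 1614332158.752743477'

# Field 1 is the changeset number, field 4 the unix timestamp.
# From the second line on, divide the change in changeset number by the
# change in time, then remember the current line for the next one.
rates="$(printf '%s\n' "$sample" | awk '
    NR > 1 { printf "%d %.2f\n", $1, ($1 - prev_id) / ($4 - prev_ts) }
           { prev_id = $1; prev_ts = $4 }')"
echo "$rates"
```

Each output line holds a changeset number and the changesets-per-second rate since the previous sample, so the result can be plotted directly or pasted into Calc.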

To convert the local times, e.g. in column D, to GMT when you’re in GMT+1, subtract one hour: =D1-TIME(1,0,0)
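An alternative that avoids the timezone arithmetic is to start from the unix timestamp column, which does not depend on the local timezone. A minimal sketch, assuming GNU date (the @seconds input form is a GNU extension, so this will not work with BSD or macOS date as written):

```shell
#!/bin/sh
# Unix timestamp taken from the first line of the sample output.
stamp=1614332155.801165148

# Drop the fractional part, then let date format the instant in UTC.
seconds="${stamp%.*}"
utc="$(date -u -d "@$seconds" '+%Y-%m-%d %H:%M:%S')"
echo "$utc"
```

For this sample the result is one hour earlier than the local time logged on the same line, matching the GMT+1 offset.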

In case anyone wants to do the more accurate changeset submission time method, that would probably look something like:

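```shell
# Fetch the changesets around the milestone and log when each one was created.
for id in {99999000..100001000}; do
    wget -q "https://www.openstreetmap.org/api/0.6/changeset/$id" -O "changeset-$id.xml"
    # created_at is an attribute of the <changeset> element; grep is a crude stand-in for a real XML parser.
    created_at="$(grep -o 'created_at="[^"]*"' "changeset-$id.xml" | head -1 | cut -d'"' -f2)"
    echo "$id,$created_at" >> output.csv
done
```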

Of course, for very large volumes it might be better to download a planet file with history, but for just a couple hundred changesets around some event this is presumably better.

Let me know if you could use some help obtaining or analysing the data!

By the way, don’t miss the OpenStreetMap blog entry:

This milestone represents the collective contribution of nearly 1 billion features globally in the past 16+ years, by a diverse community of over 1.5 million mappers.

https://blog.openstreetmap.org/2021/02/25/100-million-edits-to-openstreetmap/

Discussion

Comment from LucGommans on 25 February 2021 at 23:57

So what ever happened to this?

My changeset ended up being number 99 999 991. I overestimated how many people would be going for it.

The numbers tell me that some hundred people tried, which I guess is quite a lot if each of them aim for a single number (being at ‘991 is not so bad for a semi-unprepared attempt methinks!). I also saw the numbers starting to speed up after we hit 99 999 900, but I sat tight until the moment that I had planned (hitting submit after ‘983). Alas, it still landed early. I really thought at least a few persons would have tried to script it and would be submitting a sufficient number that my request would be competing for HTTP processing time and, thus, that it would make sense to try to submit (by hand) around 17 changesets before the hundred millionth, but that does not seem to have been the case :)

Comment from Harry Wood on 26 February 2021 at 00:18

hehe. That is quite funny. The servers all coped perfectly …until people started congratulating her!

I’m glad the 100 Millionth Edit wasn’t somebody’s boring automated thing.

Comment from LucGommans on 26 February 2021 at 00:27

As a hacker (in the broad sense of the word), automating things is the expected route for me. I’m surprised so many people feel like that would have been cheating or boring (“may the best script win” is what I’m used to and people fight tooth and nail to get on pixelfluts!). Not that I disagree or don’t understand, it just both surprises me that nobody automated it and that people seem to near-universally feel this way. That’s good to keep in mind for the next milestone and makes me glad I wasn’t well prepared, now it was all equal :)

Comment from Mateusz Konieczny on 26 February 2021 at 00:42

The same for me. I was 200 changesets early (stack of StreetComplete edits made on survey today, with autosaving disabled).

Comment from Mateusz Konieczny on 26 February 2021 at 00:43

I worried that it may be vandalism or paid editor. The most ironic edit would be DWG revert bot :)

Comment from seav on 26 February 2021 at 04:54

I’m pretty sure it’s easy to spin a vandalism or revert edit positively. But I’m really happy that the changeset is in Africa and by a committed mapper who was nominated for an OSM Award in 2017.

Comment from LucGommans on 26 February 2021 at 09:18

Same! The edit I did to try and get the milestone edit was actually in South America, near a city where a good friend lives. But an OSM Award nominee and non-western edit is of course even better :)

Comment from brabo on 26 February 2021 at 15:50

i had 3 small changesets ready that i uploaded in the last seconds, and got as close as 99999992.

very happy with the milestone edit being of someone who has been so long dedicated mapper!

Comment from FrankOverman on 28 February 2021 at 12:35

Tnx Luc, very interesting reading material. Esp the Making Of is nice to see some decent shell scripting. For me has been a while, but surely I will come back and do some closer reading. Mvg, Frank